Langfuse Alternatives? Langfuse vs Helicone


Introduction

As Large Language Models (LLMs) appear in more and more applications, teams need observability tools to monitor, analyze, and optimize their LLM-powered applications. Several options are available, and many developers and organizations are evaluating alternatives that offer unique features or better fit their specific needs. In this comparison, we look at two notable players in the LLM observability space, Helicone and Langfuse, focusing on their distinct features and capabilities.

Quick Comparison

Here's a quick overview of how Helicone compares to Langfuse:

| Aspect | Helicone | Langfuse |
|---|---|---|
| Best For | Teams wanting proxy-based monitoring or SDK integration | Teams focusing on SDK-first integration |
| Pricing | Free tier available, flexible pricing | Free tier available, flexible pricing |
| Key Strength | Comprehensive features, high scalability, cloud-focused | Self-hosting ease, open-source |
| Drawback | More complex self-hosting setup due to distributed architecture | Single PostgreSQL database may limit scalability |

Overview: Helicone vs. Langfuse

| Feature | Helicone | Langfuse |
|---|---|---|
| Open-Source | ✅ | ✅ |
| Self-Hosting | ✅ | ✅ |
| One-Line Integration | ✅ | ❌ |
| Caching | ✅ | ❌ |
| Prompt Management | ✅ | ✅ |
| Agent Tracing | ✅ | 🟠 (Limited at scale) |
| Prompt Experiments | ✅ | ✅ |
| Evaluation | ✅ | ✅ |
| Transparent Pricing | ✅ | ✅ |
| Image Support | ✅ | ✅ |
| Scalability | ✅ | ❌ |
| Data Export | ✅ | ✅ |
| Cost Analysis | ✅ | 🟠 (Limited at scale) |
| User Tracking | ✅ | 🟠 (Limited at scale) |
| User Feedback | ✅ | 🟠 (Limited at scale) |

Use Case Scenarios

Different tools excel in different scenarios. Here's a quick guide to help you choose the right tool for your specific needs:

- Teams Needing Proxy-Based Implementation
  - Best Tool: Helicone
  - Why: Offers a robust proxy architecture with caching and edge deployment capabilities.
- Teams Requiring Deep Tracing & Evaluation
  - Best Tool: Consider both
  - Why: Both platforms offer comprehensive tracing and evaluation capabilities.
- Simple Self-Hosting Setup
  - Best Tool: Langfuse
  - Why: A single PostgreSQL database makes deployment straightforward.
- Highly Scalable Self-Hosting
  - Best Tool: Helicone
  - Why: Its Helm chart deploys a distributed architecture built on Cloudflare Workers, ClickHouse, and Kafka, which scales better.
- High Volume LLM Usage
  - Best Tool: Helicone
  - Why: Langfuse handles moderate scale, while Helicone's distributed architecture is built to handle millions to billions of requests.
- Enterprise with Complex Workflows
  - Best Tool: Consider both
  - Why: Both platforms serve enterprise customers with comprehensive feature sets.

Helicone

Designed for: Developers & Analysts


What is Helicone?

Helicone is a comprehensive, open-source LLM observability platform designed for developers of all skill levels. Its features include advanced caching, custom properties for detailed analysis, and built-in security measures. Its architecture, built on Cloudflare Workers, ClickHouse, and Kafka, is designed for high scalability and performance, making it suitable for both small projects and large-scale enterprise applications.

Top Features

- High Scalability - Built on a robust infrastructure to handle high-volume LLM interactions. Helicone has processed over 2 billion LLM logs and 1.6 trillion tokens.

- Advanced Caching - Reduce latency and costs with edge caching and customizable cache settings.

- Comprehensive Security - Protect against prompt injections and data exfiltration with built-in security measures.

- Flexible Integrations - Seamlessly integrate with popular tools like PostHog, LlamaIndex, and LiteLLM.

- Custom Properties and Scoring - Add metadata and scoring metrics for in-depth analysis and optimization.
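When traffic goes through Helicone's proxy, caching and custom properties are controlled per request with HTTP headers. The sketch below builds such a header set using only the standard library. The header names (`Helicone-Auth`, `Helicone-Cache-Enabled`, `Helicone-Property-<Name>`, plus the standard `Cache-Control`) follow Helicone's documentation as we understand it and should be checked against the current docs; the function name `helicone_headers` and the example property names are hypothetical.

```python
from typing import Dict, Optional


def helicone_headers(
    helicone_api_key: str,
    cache_enabled: bool = False,
    cache_max_age_seconds: Optional[int] = None,
    properties: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Build headers for a request routed through the Helicone proxy (hypothetical helper).

    Header names are assumed from Helicone's docs; verify before relying on them.
    """
    headers = {"Helicone-Auth": f"Bearer {helicone_api_key}"}
    if cache_enabled:
        # Ask the proxy to serve identical requests from its cache.
        headers["Helicone-Cache-Enabled"] = "true"
        if cache_max_age_seconds is not None:
            # Standard Cache-Control directive bounding how long a cached response is reused.
            headers["Cache-Control"] = f"max-age={cache_max_age_seconds}"
    for name, value in (properties or {}).items():
        # Each custom property becomes filterable metadata on the logged request.
        headers[f"Helicone-Property-{name}"] = value
    return headers


if __name__ == "__main__":
    print(
        helicone_headers(
            "sk-helicone-example",
            cache_enabled=True,
            cache_max_age_seconds=3600,
            properties={"Environment": "staging", "Feature": "summarize"},
        )
    )
```

The resulting dictionary can be passed as `default_headers` when constructing an OpenAI Python client whose `base_url` points at the Helicone proxy. Keeping header construction in one function makes it easy to enable caching or tag requests differently per environment.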

How Does Helicone Compare to Langfuse?

Helicone differentiates itself from Langfuse with a broader feature set, higher scalability, and a focus on cloud performance. Its infrastructure, built on Cloudflare Workers, ClickHouse, and Kafka, is designed for high-volume applications, and its one-line integration and clean UI make it accessible to developers of all levels.

Whereas Langfuse emphasizes ease of self-hosting, Helicone offers both self-hosted and cloud options, giving users flexibility without compromising on performance. Its caching, custom properties, and security features make it a strong fit for complex and large-scale projects.

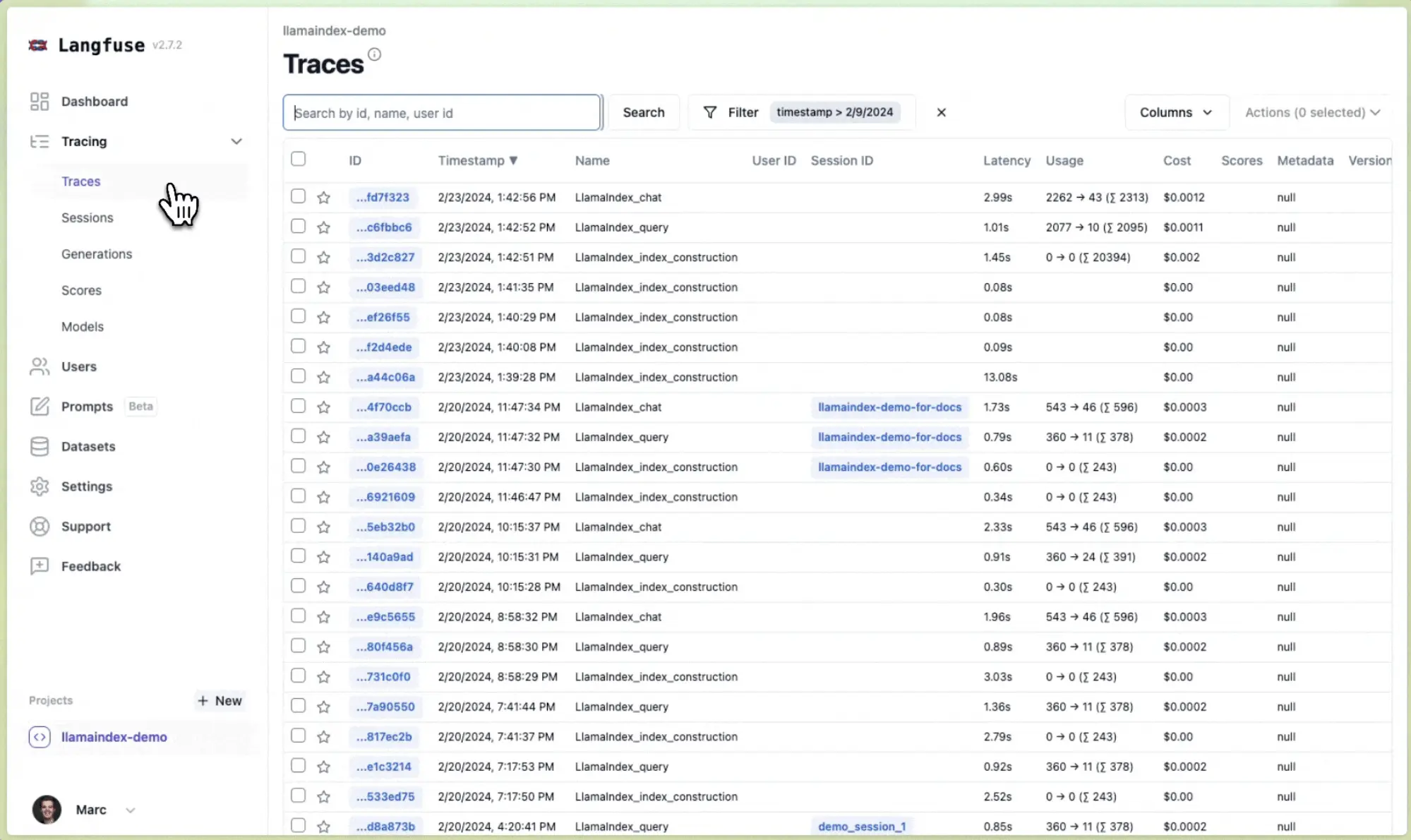

Langfuse

What is Langfuse?

Langfuse is an open-source LLM observability tool that offers tracing and monitoring capabilities. It emphasizes ease of self-hosting, making it accessible for small teams or individual developers who prefer to manage their own infrastructure. Langfuse runs on a PostgreSQL database, simplifying the self-hosting process but potentially limiting scalability for high-volume applications.

Top Features

- Self-Hosting Focus - Designed for easy self-deployment, allowing teams to manage their own infrastructure.

- Open-Source - Fully open-source, encouraging community contributions and customizations.

- Tracing - Provides tracing capabilities for LLM interactions, including session tracking.

- Prompt Management - Offers prompt versioning and management features.

How Does Langfuse Compare to Helicone?

Langfuse distinguishes itself by emphasizing ease of self-hosting and simplicity in setup. Relying on PostgreSQL as its only database backend simplifies deployment and suits low-volume projects or developers who prefer managing their own infrastructure. This makes Langfuse an attractive option for teams or individual developers focused on small to medium-scale applications.

However, PostgreSQL may hit performance limits as data volume grows. In addition, without a data streaming platform such as Kafka buffering incoming logs, scaling ingestion is harder and data persistence is at risk: if the system goes down, logs that have not yet been stored may be lost.
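To make the persistence point concrete, here is a generic sketch of why a durable log between ingestion and the database helps. It does not reflect either product's actual implementation: a local append-only file stands in for a Kafka topic, and the class and function names are hypothetical. Each event is flushed to disk before `append()` returns, so a consumer that crashes after storing only some events can resume from its last committed offset instead of losing the rest.

```python
import json
import os
import tempfile
from typing import Dict, List


class AppendOnlyLog:
    """Hypothetical stand-in for a durable stream such as a Kafka topic."""

    def __init__(self, path: str) -> None:
        self.path = path

    def append(self, event: Dict) -> None:
        # Write and fsync before returning, so the event survives a process crash.
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")
            f.flush()
            os.fsync(f.fileno())

    def read_from(self, offset: int) -> List[Dict]:
        # Offsets count events, loosely mirroring consumer offsets in Kafka.
        if not os.path.exists(self.path):
            return []
        with open(self.path, encoding="utf-8") as f:
            events = [json.loads(line) for line in f]
        return events[offset:]


def simulate_crash_and_recovery(path: str) -> List[Dict]:
    log = AppendOnlyLog(path)
    for i in range(3):
        log.append({"request_id": i, "tokens": 100 + i})
    # Pretend only the first event reached the database before the consumer crashed.
    committed_offset = 1
    # A restarted consumer re-opens the log and resumes from its committed offset.
    return AppendOnlyLog(path).read_from(committed_offset)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(simulate_crash_and_recovery(os.path.join(d, "llm-logs.jsonl")))
        # prints [{'request_id': 1, 'tokens': 101}, {'request_id': 2, 'tokens': 102}]
```

Running it prints the two events the simulated consumer had not yet committed, which a real consumer would then write to storage. Kafka provides the same guarantee with replication across brokers, which a single local file cannot.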

Frequently Asked Questions

- What is the main difference between Helicone and Langfuse?

  The main differences lie in focus and scalability. Helicone emphasizes cloud performance, scalability, and advanced features, making it suitable for high-volume applications. Langfuse focuses on ease of self-hosting and simplicity, which suits smaller projects but can run into performance issues as data volume grows.

- Which tool is best for beginners?

  Both are beginner-friendly. Helicone offers an easy start with its one-line integration and comprehensive feature set. Langfuse is simpler to self-host; integrating it usually requires its SDK, but setup is still not overly complicated.

- Can I switch easily between these tools?

  Yes. Switching to and from Helicone is simple because it does not require an SDK; you only need to change the base URL and headers. Langfuse, on the other hand, requires its SDK and code changes, making the switch more involved.

- Are there any free options available?

  Yes. Both Helicone and Langfuse offer free tiers, which makes them accessible for small projects or initial testing.

- How do these tools handle data privacy and security?

  Both tools are SOC 2 compliant. They also support self-hosting for stricter compliance requirements, allowing you to keep all data on your own infrastructure if necessary.
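The FAQ answer on switching (change only the base URL and headers) can be illustrated with a small configuration helper. It assumes the OpenAI Python SDK, whose `OpenAI` client accepts `api_key`, `base_url`, and `default_headers`, and Helicone's OpenAI proxy endpoint `https://oai.helicone.ai/v1` as given in Helicone's documentation at the time of writing; the helper name `openai_client_kwargs` is hypothetical. The sketch returns plain keyword arguments, so it runs without the SDK installed.

```python
from typing import Dict, Optional

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Helicone's OpenAI proxy endpoint as documented by Helicone; confirm before use.
HELICONE_OPENAI_BASE_URL = "https://oai.helicone.ai/v1"


def openai_client_kwargs(
    openai_api_key: str, helicone_api_key: Optional[str] = None
) -> Dict[str, object]:
    """Return keyword arguments for openai.OpenAI(...) (hypothetical helper).

    With a Helicone key, requests are routed through the proxy; without one,
    they go straight to OpenAI. Request code elsewhere stays the same.
    """
    if helicone_api_key is None:
        return {"api_key": openai_api_key, "base_url": OPENAI_BASE_URL}
    return {
        "api_key": openai_api_key,
        "base_url": HELICONE_OPENAI_BASE_URL,
        # The only extra header needed to authenticate with the proxy.
        "default_headers": {"Helicone-Auth": f"Bearer {helicone_api_key}"},
    }


if __name__ == "__main__":
    print(openai_client_kwargs("sk-openai-example"))
    print(openai_client_kwargs("sk-openai-example", "sk-helicone-example"))
```

In application code this becomes `client = OpenAI(**openai_client_kwargs(os.environ["OPENAI_API_KEY"], os.environ.get("HELICONE_API_KEY")))`. Every later `client.chat.completions.create(...)` call is unchanged, which is what makes moving to or from Helicone a configuration change rather than a code change.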

Conclusion

Choosing the right LLM observability tool depends on your specific needs and priorities. Helicone offers a scalable, feature-rich platform suited to applications ranging from startups to large enterprises, especially where high performance and advanced analytics are required. Langfuse provides a simpler, self-hosted solution suited to smaller teams or low-volume projects that prefer to manage their own infrastructure.

Other Helicone vs Langfuse Comparisons

- Langfuse has its own comparison against Helicone live on their website